Kubernetes Survival Guide

Basic how-to commands

kubectl get namespace

kubectl config set-context --current --namespace=kube-system

kubectl version

kubectl cluster-info

kubectl cluster-info dump

kubectl config view

kubectl get nodes

kubectl get node -o wide # more info

kubectl describe nodes # ton of info about nodes

kubectl get deployments

kubectl describe deployment

kubectl get pod

kubectl get pods

kubectl get pods --output=wide # more info, including IPs

kubectl get pod -A # all namespaces

kubectl get pods --namespace=kube-system # specify namespace

kubectl describe pods

kubectl describe pod my_pod1

kubectl get events

kubectl get svc

kubectl get services

kubectl describe services/nginx

kubectl get pod,svc -n kube-system # view pod,svc for kube-system

kubectl delete service hello-node

kubectl delete deployment hello-node

kubectl logs my_pod1

kubectl exec my_pod1 -- env # run command on pod

kubectl exec -ti my_pod1 -- bash # get shell on pod

kubectl exec -ti my_pod1 -- /bin/sh # get shell on pod

kubectl top pods # show pod CPU / Mem

kubectl top pods -A # all namespaces

kubectl top pod my_pod1 # specific pod

kubectl get pod nginx-676b6c5bbc-g6kr7 --template={{.status.podIP}}

Deployments and Services

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --type=NodePort --port=80

kubectl expose deployment nginx2 --type=LoadBalancer --port=80

kubectl delete service nginx

kubectl proxy # default 8001 on local host

curl http://localhost:8001/version # or wherever proxy is located

curl http://localhost:8001/api/v1/namespaces/default/pods # info about pods

curl 192.168.3.223:8001/api/v1/namespaces/default/pods/nginx-676b6c5bbc # info about a pod

kubectl label pods nginx-676b6c5bbc version=v1 # label a pod

kubectl get pods -l version=v1 # get pods with a label

kubectl get services -l version=v1 # get services with a label

kubectl delete service -l app=nginx

kubectl get rs # show replica sets

kubectl scale deployment nginx --replicas=4 # scale up

kubectl scale deployment nginx --replicas=2 # scale down

kubectl set image deployment/myapp myapp=myapp:v2 # update image for deployment ( container-name=image )

kubectl rollout status deployment/myapp # check status of rollout

kubectl rollout undo deployment/myapp # roll back if failed

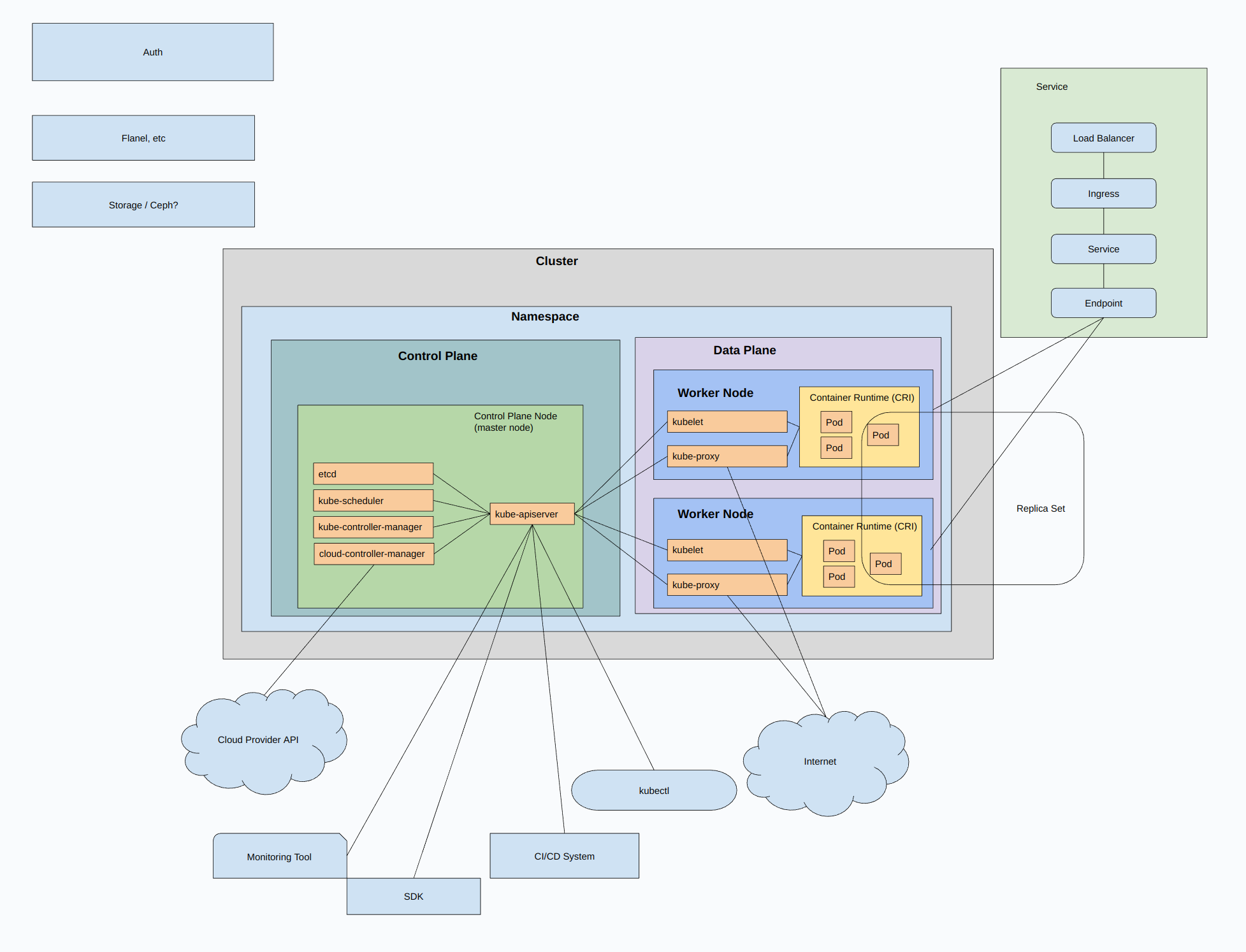

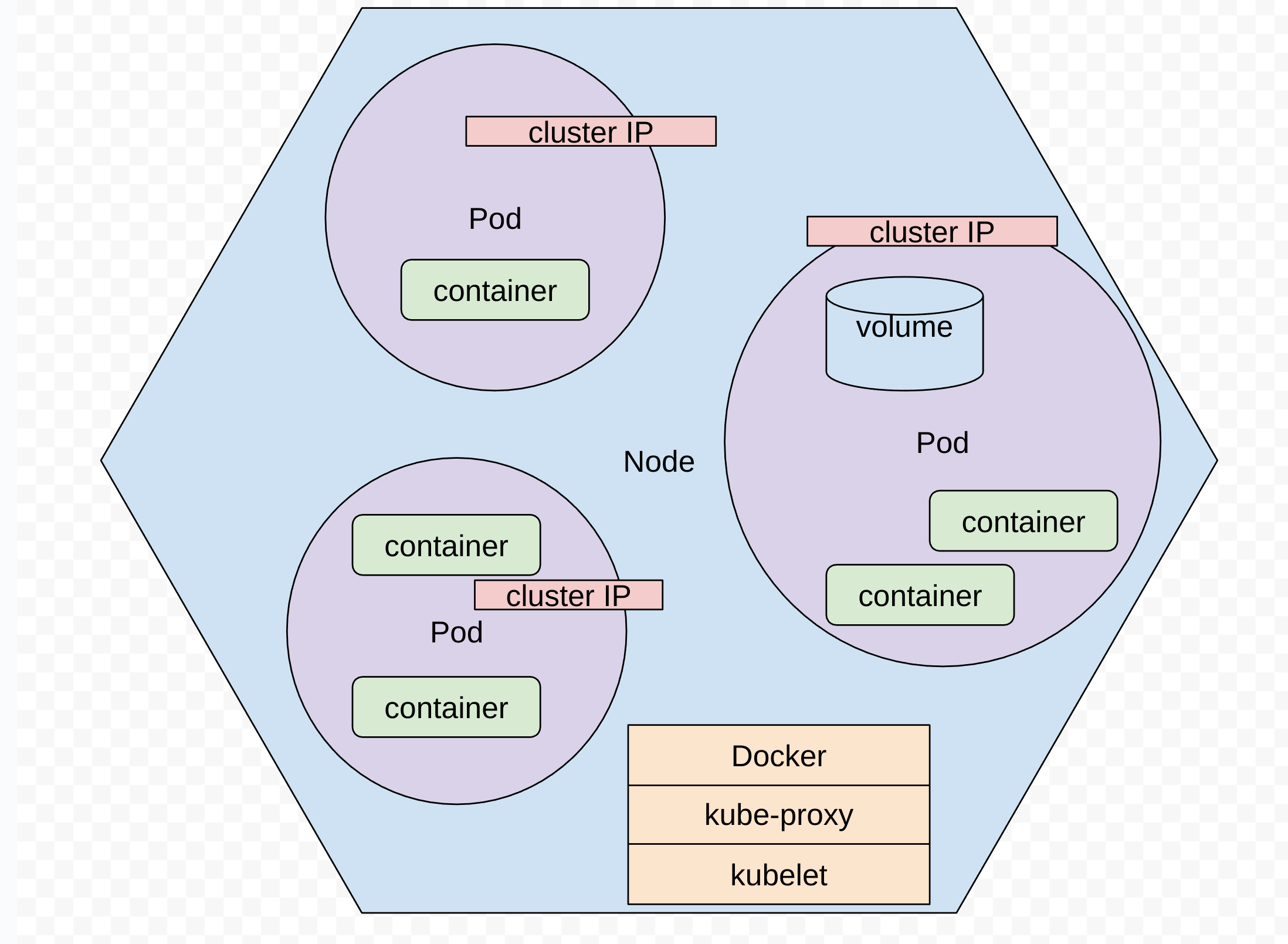

Architecture and terms

How the network fits together

Note:

- containers in a pod share an IP and connect to each other with localhost, so they need to coordinate ports

- can ping and TCP connect directly to node IP, cluster IP, or pod IP

- no port or hostPort sometimes because the exposed port is baked in ( kubectl describe pod xxxx )

Service types:

- ClusterIP (default)

  - set up internal IP within cluster - for apps within the cluster

  - cluster network / pod network

  - also load balances

- NodePort

  - specified port set up on each node in cluster ( each node becomes an LB ?? )

  - also creates ClusterIP ( automatic )

  - Access with: NodeIP:NodePort

  - OK if you can access nodes directly

  - expose with NAT

  - also load balances

  - default port range 30000 - 32767

- LoadBalancer

  - create load balancer with external IP (NEEDS third party LB!!!!)

  - cloud only ????? how to set up manually??????

  - also creates NodePort and ClusterIP

- ExternalName - set up a DNS name

  - ??????

Communication:

- container-to-container ( localhost )

- Pod-to-Pod - pod network ( cluster network ), connected across nodes, Container Network Interface (CNI), CNI plugins - Calico, Flannel, more

- Pod-to-Service - service proxy - monitors EndpointSlices and routes traffic

- External-to-Service - Gateway API (or its predecessor, Ingress) makes services available outside the cluster

Different address ranges:

- pods - IPs assigned by network plugin

- services - IPs assigned by kube-apiserver

- nodes - IPs assigned by kubelet or cloud-controller-manager

- all pods can connect to all other pods

- agents on a node (ex kubelet) can connect to all pods on that node

How some things connect

external LB = node IP ( on 31009 ) = Cluster IP ( on 80 ) = pod IP ( on 80 )

???? = 192.168.3.206:31009 = 10.43.143.240:80 = 10.42.0.20:80

10.42.0.18:80

10.42.0.22:80

10.42.0.21:80
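The chain above can be modeled roughly as two lookups followed by a backend pick. This is only an illustration using the addresses from these notes: the table names and the `route` function are made up for the sketch, and the real service proxy does this with iptables/IPVS rules, not Python dictionaries.

```python
import random

# NodePort -> (ClusterIP, service port); values copied from the notes above.
NODE_PORTS = {31009: ("10.43.143.240", 80)}

# (ClusterIP, service port) -> pod endpoints backing the service.
ENDPOINTS = {
    ("10.43.143.240", 80): [
        "10.42.0.20:80",
        "10.42.0.18:80",
        "10.42.0.22:80",
        "10.42.0.21:80",
    ],
}


def route(node_port, rng=random):
    """Follow NodePort -> ClusterIP -> one pod endpoint (picked at random)."""
    cluster_ip = NODE_PORTS[node_port]
    return rng.choice(ENDPOINTS[cluster_ip])


if __name__ == "__main__":
    # Any node IP works for the first hop, e.g. 192.168.3.206:31009.
    print(route(31009))
```

Every call may land on a different pod, which is why each service type in the list above "also load balances".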

Basic Manifests

Deployment field explanation:

.spec.replicas

.spec.selector # field defines how the created ReplicaSet finds which Pods to manage

.spec.selector.matchLabels

.spec.template # pod template

.spec.template.metadata.labels # pods are labeled with this

.spec.template.spec.containers # container list for pod
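A rough sketch of what matchLabels means: a pod is selected when every key/value pair in the selector is present in the pod's labels. The function name `selector_matches` and the sample `pod-template-hash` value are only for illustration; the real matching is done by the Kubernetes controllers.

```python
def selector_matches(match_labels, pod_labels):
    """True when every key/value in matchLabels is present on the pod."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


if __name__ == "__main__":
    pod_labels = {"app": "myapp-pod-nginx", "pod-template-hash": "676b6c5bbc"}
    print(selector_matches({"app": "myapp-pod-nginx"}, pod_labels))  # extra labels are fine
    print(selector_matches({"app": "myapp-nginx"}, pod_labels))      # different value, no match
```

The same rule applies to a Service selector, so a Service only finds pods whose labels (from .spec.template.metadata.labels) contain its selector.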

Deployment, service, and ingress all as one file:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-nginx
  labels:
    app: myapp-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp-pod-nginx
  template:
    metadata:
      labels:
        app: myapp-pod-nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: myapp-nginx-service
spec:
  selector:
    app: myapp-pod-nginx # must match the pod template labels, not the Deployment's own labels
  ports:
    - protocol: TCP
      port: 80
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: myapp-nginx-ingress
spec:
  entryPoints:
    - web
  routes:
    - match: Path(`/`)
      kind: Rule
      services:
        - name: myapp-nginx-service
          port: 80

Apply manifests or remove:

kubectl apply -f myapp.yaml

kubectl delete -f myapp.yaml

Service Types

Basic ClusterIP Service (Default)

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: my-app # Selects pods with this label
  ports:
    - protocol: TCP
      port: 80 # Port exposed by the service
      targetPort: 8080 # Port where the application is running in the container
  type: ClusterIP # Default type (internal access only)

NodePort Service (Exposes on Each Node)

apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
      nodePort: 30007 # Optional; Kubernetes assigns a random port if omitted (range: 30000-32767)
  type: NodePort
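A tiny sketch of the nodePort rule noted in the comment above: the value is either left out (Kubernetes picks one) or must fall inside the allowed range. The default range 30000-32767 is assumed here; a cluster admin can change it on the API server, and the function name is made up for the example.

```python
# Default NodePort range; the upper bound 32767 is inclusive.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def check_node_port(node_port):
    """Return True if nodePort is omitted (None) or inside the default range."""
    return node_port is None or node_port in DEFAULT_NODE_PORT_RANGE


if __name__ == "__main__":
    for candidate in (None, 30007, 8080):
        print(candidate, check_node_port(candidate))
```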

LoadBalancer Service (For Cloud Providers)

apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer

ExternalName Service (For External DNS Resolution)

apiVersion: v1
kind: Service
metadata:
  name: external-service
spec:
  type: ExternalName
  externalName: example.com # Redirects requests to example.com

Headless Service (No Load Balancing, Direct Pod Access)

apiVersion: v1
kind: Service
metadata:
  name: headless-service
spec:
  selector:
    app: my-db
  clusterIP: None # Disables load balancing
  ports:
    - port: 5432
      targetPort: 5432

Deployment / Service Options

in service:

ports:
  - protocol: TCP
    port: 80 # port on Cluster IP ( VIP )
    targetPort: 9376 # port on pods ( if omitted, defaults to the value of port )

in deployment, name a port:

ports:
  - containerPort: 80
    name: http-web-svc

in service, use named port:

ports:
  - name: name-of-service-port
    protocol: TCP
    port: 80
    targetPort: http-web-svc
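The targetPort rules above can be sketched as a small lookup: a number is used as-is, a missing value falls back to port, and a string is looked up among the container's named ports. The function name and the dictionary shapes are assumptions that mirror the YAML fields; this is not how Kubernetes implements it internally.

```python
def resolve_target_port(service_port, container_ports):
    """Return the pod port a service port entry ends up pointing at."""
    # targetPort defaults to the same value as port when omitted.
    target = service_port.get("targetPort", service_port["port"])
    if isinstance(target, int):
        return target
    # A string targetPort refers to a named containerPort.
    for container_port in container_ports:
        if container_port.get("name") == target:
            return container_port["containerPort"]
    raise LookupError(f"no container port named {target!r}")


if __name__ == "__main__":
    named = [{"containerPort": 80, "name": "http-web-svc"}]
    print(resolve_target_port({"port": 80, "targetPort": 9376}, named))
    print(resolve_target_port({"port": 80}, named))
    print(resolve_target_port({"port": 80, "targetPort": "http-web-svc"}, named))
```

Naming the port means the container port number can change later without touching the Service.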

More Traefik Routing

- Alternate path/route with Traefik ingress

- Also removes prefix using Middleware

---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: app2-nginx-ingress
spec:
  entryPoints:
    - web
  routes:
    - match: PathPrefix(`/docs`)
      kind: Rule
      services:
        - name: app2-nginx-service
          port: 80
      middlewares:
        - name: app2-stripprefix
---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: app2-stripprefix
spec:
  stripPrefix:
    prefixes:
      - /docs
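What the stripPrefix middleware does to a request path, as a rough sketch: the matching prefix is cut off before the request is forwarded, and the result always starts with a slash. The function below is a simplified stand-in written for these notes, not Traefik's code.

```python
def strip_prefix(path, prefixes):
    """Remove the first matching prefix and keep a leading slash."""
    for prefix in prefixes:
        if path.startswith(prefix):
            rest = path[len(prefix):]
            return rest if rest.startswith("/") else "/" + rest
    return path  # no prefix matched, path is forwarded unchanged


if __name__ == "__main__":
    for request_path in ("/docs/page.html", "/docs", "/other"):
        print(request_path, "->", strip_prefix(request_path, ["/docs"]))
```

So app2-nginx-service sees /page.html when the client asked for /docs/page.html.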

Volumes

- many different types of volumes exist, many are deprecated

- subPath property exists

Some things:

- Ephemeral Volumes

- PV - PersistentVolume

- PVC - PersistentVolumeClaim

Ephemeral Volumes types:

- configMap

- more ….

PersistentVolume types:

- csi - Container Storage Interface (CSI)

- fc - Fibre Channel (FC) storage

- hostPath - HostPath volume (for single node testing only; WILL NOT WORK in a multi-node cluster; consider using local volume instead)

- iscsi - iSCSI (SCSI over IP) storage

- local - local storage devices mounted on nodes.

- nfs - Network File System (NFS) storage

PersistentVolumeClaim:

- basically like an interface between deployment and volume

- Abstraction - Decouples Storage from Applications

- Dynamic Provisioning with StorageClasses

- Portability & Flexibility

- Security & Access Control: Namespace Isolation and RBAC (Role-Based Access Control)

- Namespace scoped and not cluster wide like PersistentVolume

- Lifecycle Management: automatic cleanup and reclaim policy

- Developer may have access to PersistentVolumeClaim but not directly to PersistentVolume

- May dynamically allocate a PersistentVolume

Access Modes

- RWO - ReadWriteOnce - r/w by a single node ( multiple pods on that node can share it )

- RWOP - ReadWriteOncePod - similar but for single pod instead of single node

- ROX - ReadOnlyMany - RO by many

- RWX - ReadWriteMany - RW by many

Reclaim Policy

- Retain – manual reclamation

- Recycle – basic scrub (rm -rf /thevolume/*); deprecated in favor of dynamic provisioning

- Delete – delete the volume

StorageClass

- Can be used to specify how volumes should be allocated

- PVC uses details provided by StorageClass to allocate PV

PersistentVolume

kubectl get pv

kubectl get pvc

PersistentVolume example:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce # This PV can be mounted as read-write by a single node
  persistentVolumeReclaimPolicy: Retain # Options: Retain, Recycle, Delete
  storageClassName: standard # Should match PVC’s storageClassName
  hostPath:
    path: "/mnt/data" # Using a hostPath (for testing only, not for production)

Instead of hostPath:

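spec:
  awsElasticBlockStore: # in-tree plugin, deprecated in favor of the CSI driver
    volumeID: vol-0123456789abcdef0
    fsType: ext4

spec:
  gcePersistentDisk: # in-tree plugin, deprecated in favor of the CSI driver
    pdName: my-disk
    fsType: ext4

spec:
  nfs:
    server: 10.0.0.1
    path: "/exported/path"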

PersistentVolume example with NFS:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0003
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: slow
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: /tmp
    server: 172.17.0.2

Using a volume:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysite-nginx
  labels:
    app: mysite-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysite-nginx
  template:
    metadata:
      labels:
        app: mysite-nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: html-volume
              mountPath: /usr/share/nginx/html

Same but define volume together with pods:

.....

.....

spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: html-volume
          mountPath: /usr/share/nginx/html
  volumes:
    - name: html-volume
      configMap:
        name: mysite-html

Same but using a volume claim:

.....

.....

spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: html-volume
          mountPath: /usr/share/nginx/html
  volumes:
    - name: html-volume # must match the name used under volumeMounts
      persistentVolumeClaim:
        claimName: app1-pv-claim

PersistentVolumeClaim

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: standard # This triggers dynamic provisioning

PersistentVolumeClaim example:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: foo-pvc
  namespace: foo
spec:
  storageClassName: "" # Empty string must be explicitly set otherwise default StorageClass will be set
  volumeName: foo-pv
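A simplified sketch of when an existing PV can satisfy a PVC: same storageClassName, the PV offers every requested access mode, and the PV is at least as large as the request. The helper names and dictionary keys are made up for the example, and the real binder also looks at things like volumeMode, label selectors and pre-bound claims, which are ignored here.

```python
# Binary size suffixes used in the manifests above.
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity):
    """Convert a quantity such as '10Gi' to bytes (binary suffixes only)."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def pv_satisfies(pv, pvc):
    """True if the PV could be bound to the PVC under the simplified rules."""
    return (
        pv["storageClassName"] == pvc["storageClassName"]
        and set(pvc["accessModes"]) <= set(pv["accessModes"])
        and to_bytes(pv["capacity"]) >= to_bytes(pvc["request"])
    )


if __name__ == "__main__":
    my_pv = {"storageClassName": "standard", "accessModes": ["ReadWriteOnce"], "capacity": "10Gi"}
    my_pvc = {"storageClassName": "standard", "accessModes": ["ReadWriteOnce"], "request": "5Gi"}
    print(pv_satisfies(my_pv, my_pvc))
```

With the my-pv and my-pvc values from these notes the check passes, since 10Gi covers the 5Gi request and both use the standard class.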

StorageClass

kubectl get storageclass

kubectl describe storageclass fast-ssd

Create a storage class ( local ):

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner # No dynamic provisioning, must create PV manually
volumeBindingMode: WaitForFirstConsumer # Ensures the volume is bound when a pod is scheduled

Create a storage class ( AWS ):

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ssd
provisioner: kubernetes.io/aws-ebs # The backend storage provider
parameters:
  type: gp3 # SSD with good performance
  iopsPerGB: "50" # Set IOPS per GB
  encrypted: "true"
reclaimPolicy: Delete # PV is deleted when PVC is deleted
volumeBindingMode: WaitForFirstConsumer # Delays binding until the pod is scheduled

Use storage class from PVC:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-dynamic-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: fast-ssd # This triggers dynamic provisioning

StatefulSets

- like a deployment but with “persistent storage or a stable, unique network identity”

- predictable, stable hostnames

- volumes created automatically if cluster is configured to dynamically provision PersistentVolumes

- Many clusters have a default StorageClass

- deleting statefulset doesn’t delete storage

- storage needs to be provisioned by a PersistentVolume provisioner or pre-provisioned

- !!!! “In local clusters, the default StorageClass uses the hostPath provisioner. hostPath volumes are only suitable for development and testing. With hostPath volumes, your data lives in /tmp on the node the Pod is scheduled onto and does not move between nodes. If a Pod dies and gets scheduled to another node in the cluster, or the node is rebooted, the data is lost.”

kubectl get pvc -l app=nginx # show PersistentVolumeClaims

kubectl delete statefulset web # remove StatefulSet

kubectl delete statefulset web --cascade=orphan # remove StatefulSet without removing pods

StatefulSet example:

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: registry.k8s.io/nginx-slim:0.21
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi
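The "predictable, stable hostnames" follow a fixed naming pattern, sketched below for the web / nginx example. The function name is made up, and the namespace "default" and cluster domain "cluster.local" are assumed defaults that may differ on a given cluster.

```python
def statefulset_identities(name, service, replicas, claim_template,
                           namespace="default", cluster_domain="cluster.local"):
    """List pod name, DNS name and PVC name for each replica ordinal."""
    identities = []
    for ordinal in range(replicas):
        pod = f"{name}-{ordinal}"
        identities.append({
            "pod": pod,
            # Stable DNS name provided through the headless service.
            "dns": f"{pod}.{service}.{namespace}.svc.{cluster_domain}",
            # PVC created from volumeClaimTemplates: <template>-<pod>.
            "pvc": f"{claim_template}-{pod}",
        })
    return identities


if __name__ == "__main__":
    for identity in statefulset_identities("web", "nginx", 2, "www"):
        print(identity)
```

Because the PVC name is derived from the pod name, a recreated web-0 picks up the same www-web-0 claim again.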

Helm

Helm - Kubernetes package manager

Helm:

sudo snap install helm --classic

Dashboard

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

helm upgrade --kubeconfig /etc/rancher/k3s/k3s.yaml --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

kubectl -n kubernetes-dashboard port-forward svc/kubernetes-dashboard-kong-proxy 8443:443

wget https://localhost:8443

https://192.168.3.228:8443/

kubectl get ingressroute -A

kubectl describe ingressroute.traefik.containo.us/dash-ingress

root@template-host:~# cat test2.yaml

apiVersion: v1
kind: Service
metadata:
  name: dash-service
spec:
  selector:
    app: kubernetes-dashboard-kong
  ports:
    - protocol: TCP
      port: 8443
      targetPort: 8443
  type: NodePort

kubectl -n kubernetes-dashboard create token kubernetes-dashboard-kong

kubectl -n kubernetes-dashboard create token default

probably needs a service account for this: Kubernetes API server.

kubectl create serviceaccount test-user

kubectl create clusterrolebinding test-user-binding --clusterrole=cluster-admin --serviceaccount=default:test-user

kubectl -n kubernetes-dashboard create token test-user

kubectl proxy # access from client

Admin User for Dashboard ( this worked ):

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

Apply it and get token:

vi user.yaml

kubectl apply -f user.yaml

kubectl -n kubernetes-dashboard create token admin-user

# alternate to grab secret ( only works if a token Secret exists for the ServiceAccount; since Kubernetes v1.24 these are no longer created automatically ):

kubectl get secret -n kubernetes-dashboard $(kubectl get serviceaccount admin-user -n kubernetes-dashboard -o jsonpath="{.secrets[0].name}") -o jsonpath="{.data.token}" | base64 --decode

- NOTE - Can also create a read only user.

Create my own app and add to kubernetes

Install Harbor

helm repo add harbor https://helm.goharbor.io

helm fetch harbor/harbor --untar

helm install my-release harbor/

helm --kubeconfig /etc/rancher/k3s/k3s.yaml install my-release harbor/

kubectl create namespace harbor

kubectl config set-context --current --namespace=harbor

helm --kubeconfig /etc/rancher/k3s/k3s.yaml install my-release harbor/

NodePort Service for Harbor

root@template-host:~# vi test3.yaml

root@template-host:~# kubectl apply -f test3.yaml

service/harbor-service created

root@template-host:~# cat test3.yaml

apiVersion: v1
kind: Service
metadata:
  name: harbor-service
spec:
  selector:
    app: my-release-harbor-core
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
  type: NodePort

root@template-host:~#

Registry Setup in Docker

https://distribution.github.io/distribution/

docker run -d -p 5000:5000 \
  --restart=always \
  -v /mnt/registry:/var/lib/registry \
  --name registry registry:2 # start registry

docker pull ubuntu # pull image from docker hub

docker image tag ubuntu localhost:5000/myfirstimage # tag for my registry

docker push localhost:5000/myfirstimage # push image to my registry

docker pull localhost:5000/myfirstimage # pull image from my registry

using nodeport IP/Port:

docker image tag ubuntu 192.168.3.228:30844/myfirstimage

docker push 192.168.3.228:30844/myfirstimage

docker pull 192.168.3.228:30844/myfirstimage
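Why the tag decides where the image goes: the part before the first slash is treated as a registry address when it looks like a host (contains a dot or a colon, or is localhost), otherwise the image is assumed to live on Docker Hub. The sketch below is a simplified reading of that rule written for these notes; it ignores digests (@sha256:...) and the library/ prefix Docker Hub adds.

```python
def split_image(ref):
    """Split an image reference into (registry, repository, tag)."""
    first, slash, rest = ref.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        # No registry host in the name, so Docker Hub is assumed.
        registry, remainder = "docker.io", ref
    repository, colon, tag = remainder.rpartition(":")
    if not colon:
        # No explicit tag given, "latest" is used.
        repository, tag = remainder, "latest"
    return registry, repository, tag


if __name__ == "__main__":
    for image in ("ubuntu", "localhost:5000/myfirstimage", "192.168.3.228:30844/myfirstimage"):
        print(image, "->", split_image(image))
```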

Needed to allow docker to use http instead of https:

root@template-host:~# cat /etc/docker/daemon.json

{
  "insecure-registries": ["192.168.3.228:30844"]
}

systemctl restart docker

Registry Setup in Kubernetes:

root@template-host:~# cat test_docker_reg.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myreg
  labels:
    app: myreg
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myreg
  template:
    metadata:
      labels:
        app: myreg
    spec:
      containers:
        - name: registry
          image: registry
          ports:
            - containerPort: 5000
---
apiVersion: v1
kind: Service
metadata:
  name: myreg
spec:
  selector:
    app: myreg
  ports:
    - protocol: TCP
      port: 5000

K3s - Use Registry

Tell k3s to use the new registry:

root@template-host:~# systemctl restart k3s

root@template-host:~# cat /etc/rancher/k3s/registries.yaml

mirrors:
  "192.168.3.228:30844":
    endpoint:
      - "http://192.168.3.228:30844"

root@template-host:~#

kubectl create deployment test1 --image=192.168.3.228:30844/myfirstimage # should work ( image name needs the registry prefix, otherwise Docker Hub is used )

Dockerize my Own App

cd app1

vi Dockerfile

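FROM ubuntu
# update the package index and install python3 in a single layer
RUN apt-get update && apt-get install -y python3
COPY index.html /
# serve the current directory ( / ) on port 8000
CMD ["/usr/bin/python3", "-m", "http.server", "8000"]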

vi index.html

test page

docker build -t app1 .

docker run -tid -p 8000:8000 app1

curl localhost:8000

docker image tag app1 192.168.3.228:30844/app1

docker push 192.168.3.228:30844/app1

## Probably wasn't needed at all: !!!!!!!!!!!!!

docker save -o myimage.tar 192.168.3.228:30844/app1:latest

docker load -i myimage.tar

Deployment and Service for my app in Kubernetes

root@template-host:~# cat app1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: app1
  labels:
    app: app1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app1
  template:
    metadata:
      labels:
        app: app1
    spec:
      containers:
        - name: app1
          image: 192.168.3.228:30844/app1:latest
          ports:
            - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: app1
spec:
  selector:
    app: app1
  ports:
    - protocol: TCP
      port: 8000
  type: NodePort

root@template-host:~#

Basic fixes / troubleshooting

kubectl top node

kubectl top pod

kubectl top pod -A

- CPU cores listed in millicpu ( 1000m is equal to 1 CPU )
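A quick way to read the CPU column: values ending in m are thousandths of a core, plain numbers are whole cores. The helper name is made up for this sketch and it only handles these two forms.

```python
def cpu_to_cores(quantity):
    """Convert a CPU quantity such as '250m' or '2' to a number of cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


if __name__ == "__main__":
    for value in ("250m", "500m", "1000m", "2"):
        print(value, "=", cpu_to_cores(value), "cores")
```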

kubectl get pod app1-6f6446f697-n6fs5 -o jsonpath="{.spec.containers[*].resources}"

Set limits for pods in a deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: app1
  labels:
    app: app1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app1
  template:
    metadata:
      labels:
        app: app1
    spec:
      containers:
        - name: app1
          image: 192.168.3.228:30844/app1:latest
          ports:
            - containerPort: 8000
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
              ephemeral-storage: "2Gi"
            limits:
              memory: "128Mi"
              cpu: "500m"
              ephemeral-storage: "4Gi"
          volumeMounts:
            - name: ephemeral
              mountPath: "/tmp"
      volumes:
        - name: ephemeral
          emptyDir:
            sizeLimit: 500Mi

Check these things:

journalctl -u k3s # logs for k3s

kubectl logs app1-6f6446f697-n6fs5 # logs for a pod

root@template-host:~# kubectl logs app1-6f6446f697-n6fs5 --all-containers

root@template-host:~# kubectl logs app1-6f6446f697-n6fs5 -c app1

kubectl events # check events

kubectl describe pod the-pod-name # can also get details on issues from this

Crashloop

- CrashLoopBackOff

- Container keeps crashing and is continuously restarted by the kubelet ( kubernetes acts as the watchdog ).

- backoff time - delay between restarts that gets longer and longer until max delay of 5 mins.
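The growing delay can be sketched as a doubling sequence with a cap. The starting value of 10 seconds and the doubling are taken from the Kubernetes documentation as I understand it, the 300 second cap is the 5 minutes noted above, and the function name is made up.

```python
def backoff_delays(restarts, initial=10, cap=300):
    """Delay in seconds before each restart: doubles each time, capped at `cap`."""
    return [min(initial * 2**attempt, cap) for attempt in range(restarts)]


if __name__ == "__main__":
    print(backoff_delays(7))
```

After about six crashes the pod sits at the 5 minute maximum between restart attempts, which is why a CrashLoopBackOff pod can look idle for a long time.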

Remove a PV ( potentially force remove ??)

kubectl delete pv pvc-b5f9e6b1-882f-48de-81b2-18cb121ed52b

kubectl patch pv pvc-3efea8fe-5ed7-41df-ad72-cb4360065b4d -p '{"metadata":{"finalizers":null}}' -n harbor